Causal analysis

One of the fundamental aims of scientific research is to reveal the operating mechanisms of a studied domain. These mechanisms consist of the causal relations between entities of the domain. The discovered cause-effect relationships form a causal model of the domain, which ideally corresponds to the real mechanisms and in most cases is an acceptable approximation of them. A good causal model captures the main operating conditions in the simplest possible way. The level of detail of the model can be adjusted to emerging needs, and the computational complexity changes accordingly. Previously this was a strong constraint; however, the increase in computing capacity brought significant changes to the field. Hypothesis-driven experimental design was replaced by computation-intensive statistical data analysis, and robust statistical methods appeared, such as Bayesian methods.

A further important change was that, alongside the previously dominant passive observations, results obtained from interventions took on an increasingly active role. In causal modeling the dominant view was that purely observational data (analyzed with classical statistical methods) yield only associations, not causal relationships. Although associations convey relevant information, the cause-effect orientation is lost, and it can be determined clearly only if it is supported by an intervention experiment. However, such an experiment is often not feasible because of obstacles such as ethical, legal and financial constraints.

Bayesian methods, on the other hand, allow the extraction of causal relations both from intervention experiments and from data obtained by passive observation. It remains true that, in general, causal relationships cannot be inferred from examining the pairwise dependency between two variables. The only exception is when one has preliminary (a priori) domain knowledge, since that may help to determine the cause-effect orientation. (For example, if there are two events A and B, and we know that A definitely precedes B, then a dependence between A and B indicates that a causal link A → B is also present.) In most cases, however, we do not have such background knowledge, so we cannot infer a causal relationship. Nevertheless, if several variables are examined together, then certain dependency patterns allow some causal relations to be identified (Pearl J: Causality, 2000). So some causal conclusions can be drawn purely on the basis of observations, even if not in all possible cases.
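One dependency pattern of this kind is the collider A → C ← B: A and B are independent on their own but become dependent once C is known, which distinguishes this structure from a chain or a common cause over the same three variables. The following is a minimal sketch of that pattern by exact enumeration; the variable names and all probability values are hypothetical and chosen only for illustration.

```python
from itertools import product

# Hypothetical parameters for a collider A -> C <- B (all variables binary).
P_A = {0: 0.5, 1: 0.5}
P_B = {0: 0.5, 1: 0.5}
# P(C=1 | A=a, B=b), keyed by (a, b); illustrative values only.
P_C1_GIVEN_AB = {(0, 0): 0.1, (0, 1): 0.8, (1, 0): 0.8, (1, 1): 0.9}


def joint(a, b, c):
    """P(A=a, B=b, C=c) under the factorization P(A) P(B) P(C | A, B)."""
    p_c1 = P_C1_GIVEN_AB[(a, b)]
    return P_A[a] * P_B[b] * (p_c1 if c == 1 else 1.0 - p_c1)


def prob(event, given=lambda a, b, c: True):
    """Conditional probability P(event | given) by exact enumeration."""
    states = list(product((0, 1), repeat=3))
    num = sum(joint(*s) for s in states if event(*s) and given(*s))
    den = sum(joint(*s) for s in states if given(*s))
    return num / den


# Marginally, knowing B tells us nothing about A.
p_a = prob(lambda a, b, c: a == 1)
p_a_given_b = prob(lambda a, b, c: a == 1, lambda a, b, c: b == 1)

# Once C is observed, B becomes informative about A ("explaining away").
p_a_given_c = prob(lambda a, b, c: a == 1, lambda a, b, c: c == 1)
p_a_given_bc = prob(lambda a, b, c: a == 1,
                    lambda a, b, c: b == 1 and c == 1)

print(p_a, p_a_given_b)
print(p_a_given_c, p_a_given_bc)
```

With these illustrative numbers, P(A=1) and P(A=1 | B=1) are both 0.5, whereas P(A=1 | C=1) is about 0.65 and P(A=1 | B=1, C=1) is about 0.53. The independence that disappears under conditioning is the signature that lets the two edges be oriented toward C.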

The Bayesian network model class is one of the most appropriate means of representing causal models. In addition to representing the joint probability distribution compactly, a Bayesian network also represents the dependencies between variables. Since it is a graphical model, the dependencies are represented both qualitatively, by the graph structure, and quantitatively, by conditional probability parameters. Moreover, under certain conditions (not detailed here), Bayesian networks can be interpreted as causal models, in which case a directed edge between two nodes corresponds to a causal relationship.
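The compact representation of the joint distribution can be made concrete with the standard Bayesian network factorization. The notation below ($X_i$ for the variables, $\mathrm{Pa}(X_i)$ for the parents of $X_i$ in the graph) and the three-variable chain are illustrative assumptions, not notation taken from this text.

$$
P(X_1, \dots, X_n) = \prod_{i=1}^{n} P\bigl(X_i \mid \mathrm{Pa}(X_i)\bigr)
$$

For a chain $A \rightarrow B \rightarrow C$ this gives

$$
P(A, B, C) = P(A)\, P(B \mid A)\, P(C \mid B).
$$

If all three variables are binary, the factorized form needs $1 + 2 + 2 = 5$ free parameters, compared with $2^3 - 1 = 7$ for an unrestricted joint distribution; the gap grows quickly as the number of variables increases while the number of parents per node stays small.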

Understanding how the three layers of interpretation of Bayesian networks (MBM, MBS, MBG) are connected led to a general breakthrough in the fields of causal inference and induction. This enabled:

1. The normative treatment of observational, interventional and counterfactual inference, which is of great importance in clinical decision support and health policy

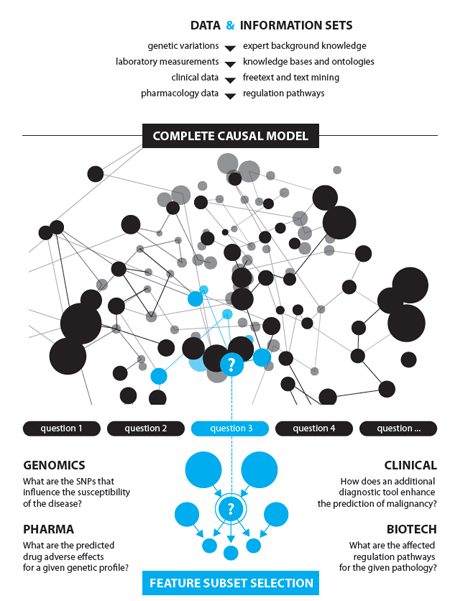

2. In the case of passive observation of a whole domain (taking advantage of the newest biomedical measurement techniques), the identification of all possible causal relationships given the constraints, and the learning of the most probable causal model

3. The exclusion of potential confounding factors in an incomplete but multivariate case of passive observation; this joint analysis of multiple variables enables the discovery of true causal relationships

4. The Bayesian learning of a complete causal model of a domain based on results from intervention experiments

5. The utilization of rich background information for the learning of complete causal models
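The difference between observational and interventional inference, and the role of a confounding factor, can be illustrated with a small worked example. The sketch below assumes a hypothetical model with a confounder Z that influences both a treatment X and an outcome Y (Z → X, Z → Y, X → Y), and compares the observational quantity P(Y=1 | X=x) with the interventional quantity P(Y=1 | do(X=x)), computed here with the standard back-door adjustment over Z. All names and probability values are assumptions made for illustration.

```python
# Hypothetical binary model: Z -> X, Z -> Y, X -> Y (Z is a confounder).
P_Z = {0: 0.5, 1: 0.5}
# P(X=1 | Z=z); illustrative values only.
P_X1_GIVEN_Z = {0: 0.2, 1: 0.8}
# P(Y=1 | X=x, Z=z), keyed by (x, z); illustrative values only.
P_Y1_GIVEN_XZ = {(0, 0): 0.1, (0, 1): 0.5, (1, 0): 0.2, (1, 1): 0.6}


def p_x_given_z(x, z):
    """P(X=x | Z=z) for binary X."""
    p1 = P_X1_GIVEN_Z[z]
    return p1 if x == 1 else 1.0 - p1


def observational(x):
    """P(Y=1 | X=x): passive observation, Z weighted by P(z | x)."""
    num = sum(P_Z[z] * p_x_given_z(x, z) * P_Y1_GIVEN_XZ[(x, z)]
              for z in (0, 1))
    den = sum(P_Z[z] * p_x_given_z(x, z) for z in (0, 1))
    return num / den


def interventional(x):
    """P(Y=1 | do(X=x)): back-door adjustment, Z weighted by P(z)."""
    return sum(P_Z[z] * P_Y1_GIVEN_XZ[(x, z)] for z in (0, 1))


obs_diff = observational(1) - observational(0)
do_diff = interventional(1) - interventional(0)
print(obs_diff, do_diff)
```

With these illustrative numbers the observed difference in outcome between X=1 and X=0 is about 0.34, while the effect of setting X by intervention is about 0.10. The gap is due entirely to Z, which is why analyzing Z jointly with X and Y is needed to separate a true causal effect from a confounded association.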